If you’ve been experimenting with AI agents, you’ve probably noticed something:

Once they start doing more than just answering prompts, things can get messy.

Whether they are parsing logs, provisioning infrastructure, or creating tickets, you eventually run into a wall:

Context gets bloated

Actions become unpredictable

Model calls get expensive because of web searches, page scrapes, and other bulky inputs

And you have no way to control or reproduce what happened

This is where a key component of AI agents comes into play: the MCP server, short for Model Context Protocol server. When you incorporate MCP servers into the mix, agents can become faster, more accurate, and more predictable.

What is the Model Context Protocol (MCP)?

Let’s take a step back and look at exactly what an MCP server is. The Model Context Protocol is an emerging open standard, originally drafted by Anthropic, for structuring, managing, and delivering context to large language models (LLMs). It was designed especially with agents in mind.

This standard is now used in solutions like HashiCorp’s Terraform MCP Server, and it provides a machine-readable, versioned, and structured way to pass context into AI models.

Instead of jamming everything into a giant prompt string, MCP organizes the key components of context:

Instructions – What the user wants

Memory – Persistent data from the session or history

Tools – What capabilities the agent has access to

Files – Any user uploads or documents

User Info – Role, permissions, preferences

It turns context into a clean interface, not just a pile of text.
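On the wire, MCP messages are JSON-RPC 2.0, so capabilities such as tools are described as structured JSON rather than prose. The Python sketch below shows what a client request to the standard `tools/list` method and a possible server reply could look like. The tool name `search_modules` and its schema are hypothetical placeholders, not copied from any real server.

```python
import json

# A JSON-RPC 2.0 request from the client asking which tools the server offers.
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# A possible response: each tool has a name, a description, and a JSON Schema
# for its inputs. The tool below is hypothetical.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "search_modules",  # hypothetical tool name
                "description": "Search the Terraform Registry for modules.",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "query": {"type": "string", "description": "Search terms"}
                    },
                    "required": ["query"],
                },
            }
        ]
    },
}


def tool_names(resp: dict) -> list[str]:
    """Return the names of all tools advertised in a tools/list response."""
    return [tool["name"] for tool in resp["result"]["tools"]]


# Messages travel as JSON text, so the structure survives serialization intact.
wire = json.dumps(response)
print(tool_names(json.loads(wire)))  # ['search_modules']
```

Because the reply is structured, the client can validate and route it in code instead of scraping tool descriptions out of a wall of prompt text.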

Why this matters for agents

Traditional prompting is chaotic. Agents often stuff the entire world into the context window: 8,000 tokens of logs, three unrelated documents, raw HTML from a search scrape, random tool descriptions, and the user’s actual question buried at the bottom.

This can lead to unexpected or unwanted outcomes, including:

High token usage and cost

Model confusion and hallucinations

Unreliable, non-reproducible results

MCP helps fix this by defining exactly what goes where and how it is presented to the model. That structure improves clarity, reduces overhead, and enables agents to behave in predictable, repeatable ways.

What an MCP Server actually does

When you run an MCP server, such as the Terraform MCP Server, it acts as a context authority for your AI stack.

Here’s what it can enable:

Context Management: Build structured, versioned context objects for each session or agent run

Policy Enforcement: Control who or what can modify parts of the context (e.g., only some users can change tools)

Audit Logging: Keep a complete trace of what data was injected and when

Routing and Optimization: Cleanly separate model selection logic from context creation

Reusability: Share standardized context schemas across agents or services

Instead of letting each agent “figure out” what to send to the model, the MCP server owns and structures the context flow.
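To make the "context authority" idea more concrete, here is a minimal Python sketch of a server that dispatches JSON-RPC `tools/list` and `tools/call` requests while enforcing a tool allowlist and keeping an audit trail. The `ToolServer` class, the `allowed_tools` policy, and the in-memory audit log are hypothetical illustrations; they are not features defined by the MCP specification or taken from Terraform's server.

```python
import time
from typing import Any, Callable


class ToolServer:
    """Hypothetical MCP-style dispatcher with an allowlist policy and an audit log."""

    def __init__(self, allowed_tools: set[str]) -> None:
        self.tools: dict[str, Callable[..., str]] = {}
        self.allowed_tools = allowed_tools         # policy: tools that may run
        self.audit_log: list[dict[str, Any]] = []  # one entry per tools/call

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self.tools[name] = fn

    def handle(self, message: dict[str, Any]) -> dict[str, Any]:
        """Answer a single JSON-RPC 2.0 request and return the response."""
        msg_id = message.get("id")
        method = message.get("method")
        if method == "tools/list":
            tools = [{"name": n, "inputSchema": {"type": "object"}} for n in sorted(self.tools)]
            return {"jsonrpc": "2.0", "id": msg_id, "result": {"tools": tools}}
        if method == "tools/call":
            params = message.get("params", {})
            name = params.get("name")
            args = params.get("arguments", {})
            allowed = name in self.allowed_tools and name in self.tools
            # Audit: record what was requested, when, and the policy decision.
            self.audit_log.append(
                {"time": time.time(), "tool": name, "arguments": args, "allowed": allowed}
            )
            if allowed:
                text, is_error = self.tools[name](**args), False
            else:
                text, is_error = f"Tool {name!r} is not permitted", True
            result = {"content": [{"type": "text", "text": text}], "isError": is_error}
            return {"jsonrpc": "2.0", "id": msg_id, "result": result}
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return {"jsonrpc": "2.0", "id": msg_id,
                "error": {"code": -32601, "message": "Method not found"}}


# Usage: "echo" is allowed by policy, "destroy" is registered but blocked.
server = ToolServer(allowed_tools={"echo"})
server.register("echo", lambda text: text)
server.register("destroy", lambda: "boom")
ok = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                    "params": {"name": "echo", "arguments": {"text": "hi"}}})
denied = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                        "params": {"name": "destroy", "arguments": {}}})
```

Keeping policy and logging in the server, rather than in each agent, means every agent that talks to it gets the same rules and the same trace for free.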

Why letting agents freeform the prompt is a mistake

Let’s look at a few reasons why letting agents roam freely over the Internet may not be a good idea. Without MCP, your agents are just shoving blobs of text, pulled from many different places, into models.

They might repeat the same boilerplate instructions, bury important information under irrelevant context, fail to include the right memory or file content, use outdated tool references, or even misinterpret what the user actually wants.

Even worse, there’s no way to debug what went wrong, because the “prompt” is just one massive, undocumented chunk. With the Model Context Protocol, each part of the context is a structured field that can be inspected, logged, tested, and versioned, just like software.
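One way to picture "context you can inspect, log, and version" is a structured object with a stable fingerprint. The Python sketch below is a hypothetical illustration built on the categories listed earlier (instructions, memory, tools, files, user info); the `ContextBundle` class and its methods are not part of the MCP specification. Identical context always produces the same SHA-256 fingerprint, so a log line can tie a model response to the exact context that produced it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class ContextBundle:
    """Hypothetical structured context mirroring the categories listed earlier."""
    instructions: str
    memory: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)
    files: dict[str, str] = field(default_factory=dict)
    user_info: dict[str, str] = field(default_factory=dict)

    def fingerprint(self) -> str:
        """SHA-256 of a canonical JSON dump; identical context gives an identical ID."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def render(self) -> str:
        """Render each non-empty field as its own labeled section, in a fixed order."""
        sections = [
            ("Instructions", self.instructions),
            ("Memory", "\n".join(self.memory)),
            ("Tools", ", ".join(self.tools)),
            ("Files", "\n".join(f"{name}:\n{body}" for name, body in self.files.items())),
            ("User info", json.dumps(self.user_info, sort_keys=True) if self.user_info else ""),
        ]
        # Empty sections are skipped so unused fields add no tokens.
        return "\n\n".join(f"## {title}\n{body}" for title, body in sections if body)


# Usage: log the fingerprint next to the rendered prompt for each model call.
ctx = ContextBundle(
    instructions="Add an S3 bucket to the storage module.",
    tools=["search_modules"],
    user_info={"role": "platform-engineer"},
)
print(ctx.fingerprint()[:12])
print(ctx.render())
```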

How MCP makes your agents smarter and cheaper

As an example of real-world benefits, let me describe what happens when you integrate something like Terraform’s MCP Server into your agent workflow.

1. Token usage drops - Because context blocks are filtered and structured, each call uses fewer tokens.

2. Model performance improves - With context separated into instructions, memory, and files, even local models like Phi-3 and Mistral can deliver sharper responses. I have also noticed that Claude Desktop responds more accurately.

3. Debugging gets easier - Each session can have a traceable context object. When something breaks or doesn’t behave the right way, you can see exactly what was passed in and why.

4. Agents become modular - Because the protocol defines structure, you can build different agents that all speak the same “context language.” They become plug-and-play components, not one-offs.

A spotlight on Terraform’s MCP Server

If you’re using Terraform to manage infrastructure, you know how powerful it is, but also how easy it is to get stuck writing modules, debugging syntax, or referencing outdated examples.

This is where Terraform’s MCP Server comes in. It was one of the first MCP servers I played around with. Since I deal with Terraform quite a bit, I could kill two birds with one stone: learn more about MCP and get more accurate, efficient Terraform code. It is not just a helper; it is a context-aware AI companion that understands Terraform accurately.

It allows the model to receive information about your current working directory and Terraform files. It also knows about the Terraform providers you have installed and their versions, as well as where your state is located, your configurations, your modules, and so on.

It acts as a proxy between your environment and the LLM, letting the model assist with tasks like:

Writing Terraform code from scratch

Suggesting changes based on your existing modules

Auto-filling provider-specific syntax

Explaining what a given HCL block does

Finding modules that match your intent from the Terraform Registry

Because the MCP server injects actual context from your local project, the model isn’t guessing from generic examples. It knows things like which version of the AWS provider you’re using, what variables already exist in your module, whether you’re deploying into a prod, dev, or test workspace, and what outputs your other modules expose.

It turns the model from a source of copy-and-paste snippets into an intelligent coding assistant with the context you actually need.
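As a hypothetical illustration of the kind of project fact a tool can surface (not a description of how the Terraform MCP Server is implemented), this Python sketch reads locked provider versions from `.terraform.lock.hcl`, the dependency lock file that `terraform init` writes to the working directory. The regex is a simplification that assumes the file's usual layout; a real tool would use a proper HCL parser.

```python
import re
from pathlib import Path

# Matches each provider block and captures its source address and locked version.
PROVIDER_RE = re.compile(
    r'provider\s+"(?P<source>[^"]+)"\s*\{[^}]*?\bversion\s*=\s*"(?P<version>[^"]+)"'
)


def provider_versions(lock_text: str) -> dict[str, str]:
    """Map provider source addresses to the versions pinned in a lock file."""
    return {m["source"]: m["version"] for m in PROVIDER_RE.finditer(lock_text)}


def read_lock_file(workdir: str = ".") -> dict[str, str]:
    """Read .terraform.lock.hcl from a working directory; {} if it is missing."""
    path = Path(workdir) / ".terraform.lock.hcl"
    return provider_versions(path.read_text(encoding="utf-8")) if path.exists() else {}


# Usage with an illustrative lock file (the version and hash are placeholders).
SAMPLE = '''
provider "registry.terraform.io/hashicorp/aws" {
  version     = "5.31.0"
  constraints = "~> 5.0"
  hashes = [
    "h1:placeholder",
  ]
}
'''
print(provider_versions(SAMPLE))  # {'registry.terraform.io/hashicorp/aws': '5.31.0'}
```

Passing a small fact like this to the model is far cheaper than pasting whole files, and it is exactly the detail that keeps generated code aligned with the provider you actually have.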

Installing it in Claude Desktop

On Windows, create a claude_desktop_config.json file in your %appdata%/Claude directory with the following contents (on macOS the file goes in ~/Library/Application Support/Claude/; check your OS for the location where it needs to be created):

```json
{
  "mcpServers": {
    "terraform": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "hashicorp/terraform-mcp-server:latest"
      ]
    }
  }
}
```

With this configuration, under Settings > Developer you will see the terraform MCP server running, along with the arguments used for the Docker container.

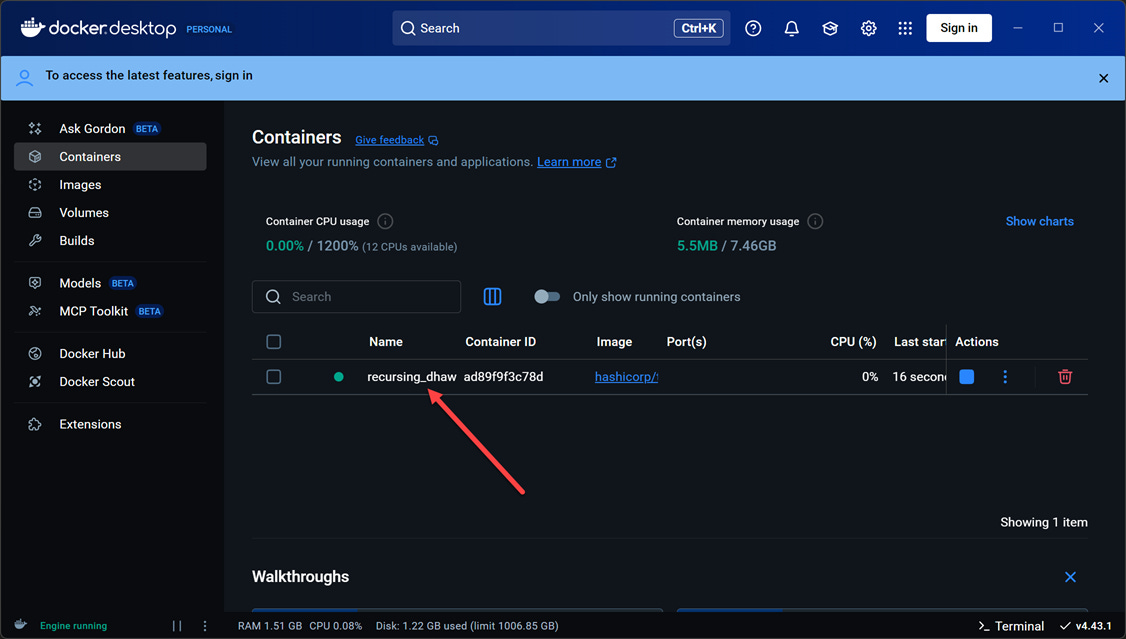

In Docker, you will see the container that was spun up as the MCP server.
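When Claude Desktop loads this config, it launches the command as a child process and talks to it over the stdio transport: newline-delimited JSON-RPC messages on stdin and stdout. That is why the Docker arguments include `-i`, which keeps the container's stdin open. The Python sketch below imitates the first step of that conversation, the `initialize` request. The client name and the `protocolVersion` value are assumptions (use a version your server supports), and `handshake()` needs Docker, so treat it as an experiment rather than a replacement for an official MCP SDK.

```python
import json
import subprocess

# The same server entry as in claude_desktop_config.json.
CONFIG = {
    "mcpServers": {
        "terraform": {
            "command": "docker",
            "args": ["run", "-i", "--rm", "hashicorp/terraform-mcp-server:latest"],
        }
    }
}


def server_argv(config: dict, name: str) -> list[str]:
    """Turn one mcpServers entry into the argv a client would launch."""
    entry = config["mcpServers"][name]
    return [entry["command"], *entry.get("args", [])]


def initialize_request(request_id: int = 1) -> dict:
    """JSON-RPC initialize request; protocolVersion and clientInfo are assumed values."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "sketch-client", "version": "0.1"},  # hypothetical
        },
    }


def handshake(argv: list[str]) -> dict:
    """Start the server, send initialize, and return its response (requires Docker)."""
    proc = subprocess.Popen(argv, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True)
    try:
        request = initialize_request()
        proc.stdin.write(json.dumps(request) + "\n")  # one JSON message per line
        proc.stdin.flush()
        while True:
            line = proc.stdout.readline()
            if not line:
                raise RuntimeError("server exited before responding")
            message = json.loads(line)
            if message.get("id") == request["id"]:  # skip anything that isn't our reply
                return message
    finally:
        proc.stdin.close()  # closing stdin is the usual way to ask a stdio server to exit
        proc.terminate()
        proc.wait()
```

For example, `handshake(server_argv(CONFIG, "terraform"))` should return the server's `initialize` response, including its `serverInfo`.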

And here’s the GitHub repo:

👉 github.com/hashicorp/terraform-mcp

Wrapping up

Model Context Protocol (MCP) servers provide intelligent context for AI models to use. They give you clean, structured, and modular context that can be optimized and audited. Many vendors are now releasing MCP servers for their solutions to help you get the most out of AI. If you’re serious about building smart, scalable AI workflows, start using MCP.


Subscribe to Between the Clouds Newsletter to keep reading this post and get 7 days of free access to the full post archives.